Summary

This work began with a customer request to support secure upcycling, a process for verifying that computers had been properly sanitized so they could be safely reused or resold. Verification was to be done by comparing outgoing machines against known-clean examples.

At the time, the product already had a related security capability, referred to internally as the comparison engine. It evaluated machines by comparing expected values against observed values to detect malicious changes. Although the comparison capability addressed a problem similar to secure upcycling, it functioned as an opaque, system-level mechanism in the product. It offered limited transparency, limited control, and little ability for users to understand or explain how evaluations were performed.

The fastest path forward was to take an existing script-based proof of concept and productize it so the customer could run the same checks directly in the product. That approach would have met the immediate request, but it would have reinforced existing limitations by adding another narrow workflow alongside an already hard-to-explain system.

My decision was to treat secure upcycling as a specific expression of comparison and use the request to strengthen the comparison engine itself. This was not an incremental UI decision, but a deliberate choice to avoid shipping a fast solution that would have locked the product into another opaque workflow. By first making the underlying comparison model explicit and aligning configuration and evaluation output around that model, this work unified two disconnected capabilities into a single, coherent system.

With this integration, secure upcycling became the entry point that brought the customer into the full product, creating an opportunity for them to see and adopt the broader platform rather than a single standalone feature. It also improved the product for other customers and made the combined capability a stronger selling point for prospective users of either feature.

My Role

I was responsible for defining the system-level direction of the capability: deciding whether to ship a narrow solution quickly or invest in a model that would support multiple workflows over time.

My focus was on clarifying the comparison model, determining how it should surface in the product, and shaping an approach that engineering could replicate consistently. I used the request as an opportunity to identify the conceptual overlap between the two capabilities and to deliver a structure that resolved the immediate need while improving existing use cases.

Capability Evolution

Secure Upcycling

The initial secure upcycling workflow existed as a script-based proof of concept. It verified that hardware had been properly cleaned, but it required hands-on engineering involvement, was difficult to support, and could not be easily explained or demonstrated. While effective, it lived entirely outside of the product's structure.

Comparison Engine

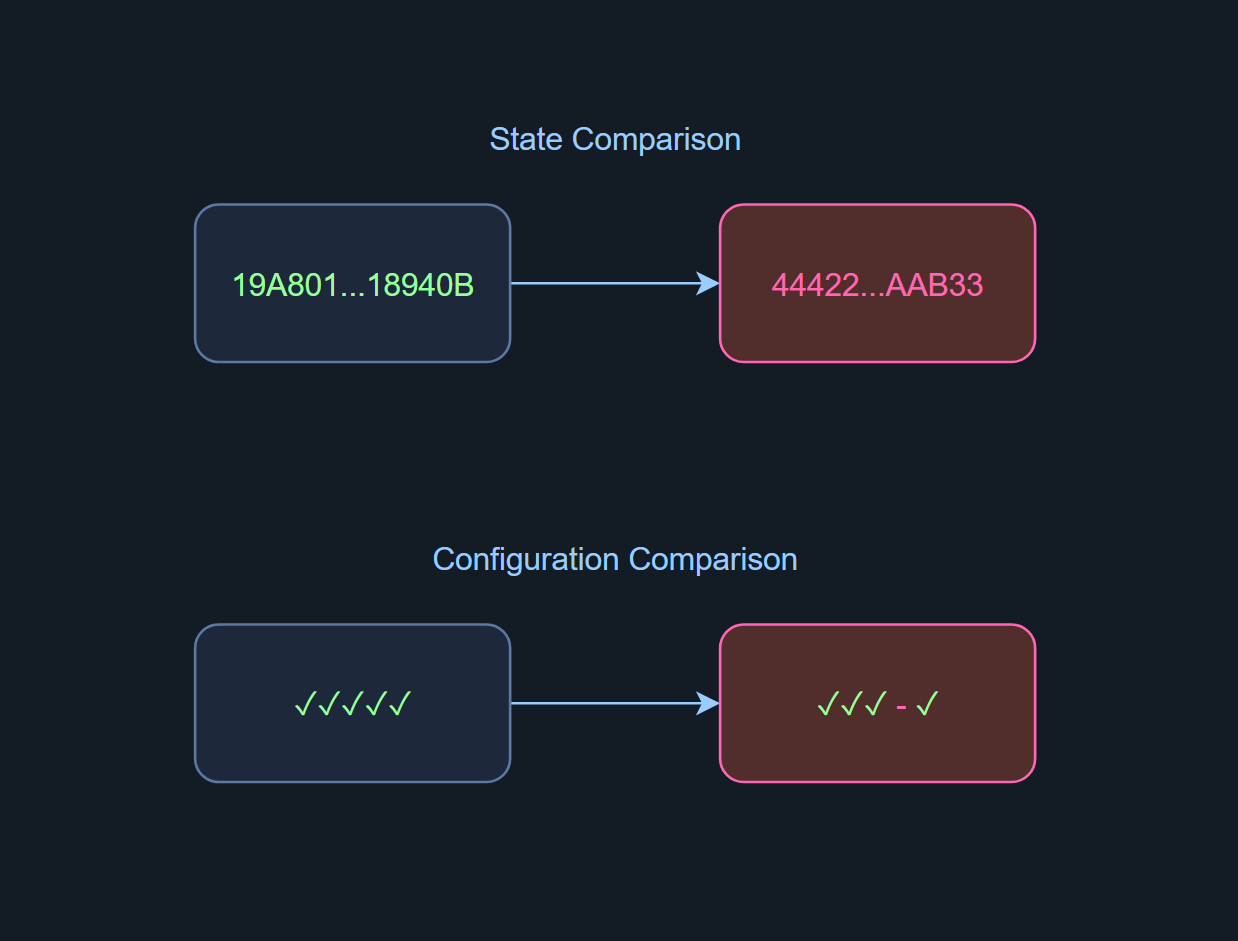

The product already monitored machines against a known good state to detect unexpected changes to hardware and its settings.

Although technically sound, the comparison capability surfaced little more than a simple pass/fail outcome. This lack of visibility made the capability difficult to demonstrate and hard to trust in audits, and it hid opportunities for environment-specific customization. Specifically, users lacked clarity into:

- what was being evaluated

- what was being evaluated against

- what differences were determined

- what limitations applied to the evaluation

The upcycling and comparison engine approaches addressed related problems but remained isolated: one was explicit but fragile, the other productized but difficult to understand and control. Simply embedding the upcycling script with a pass/fail outcome would have added another narrow path without improving the underlying system.

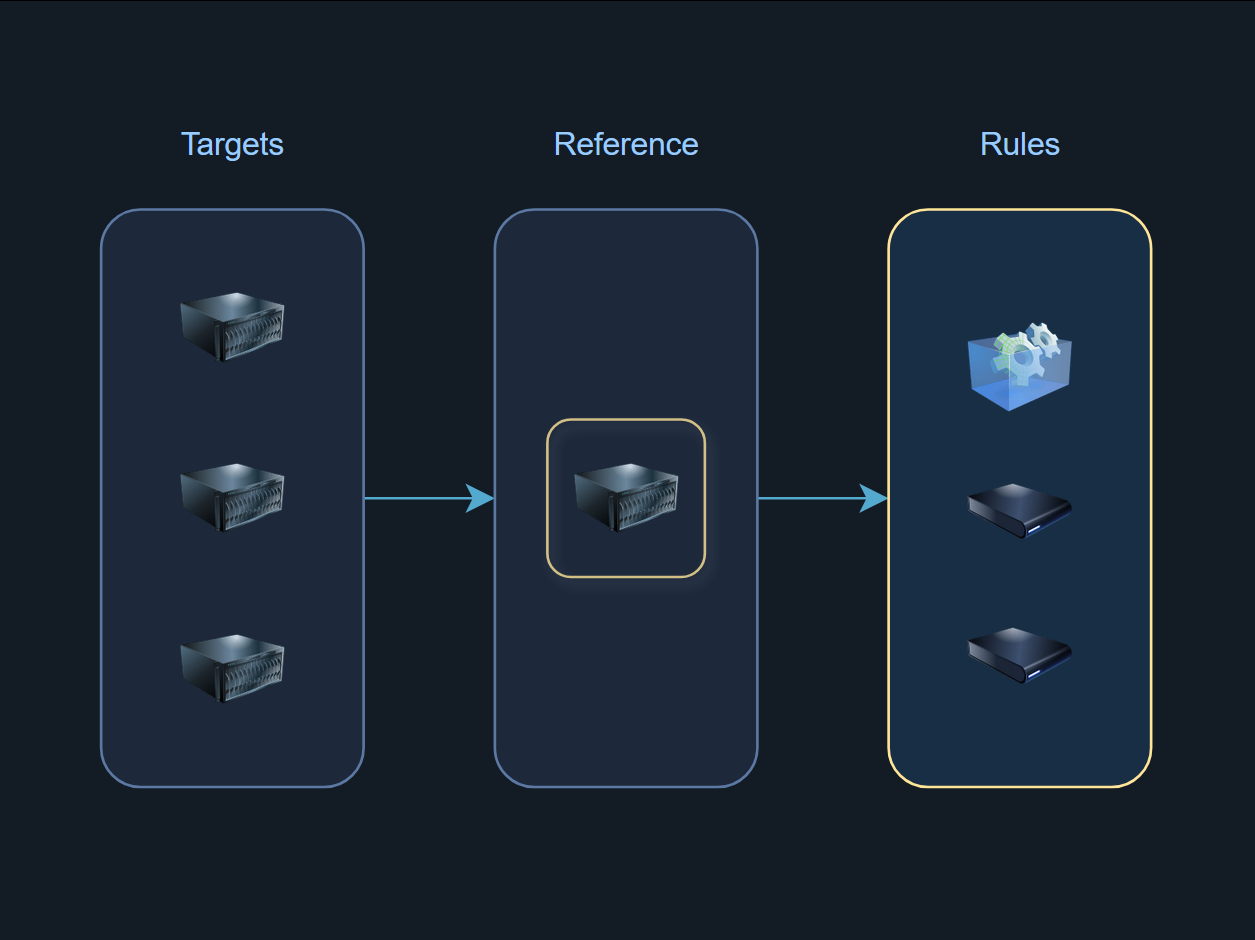

The alternative was to make the underlying comparison model explicit and use it consistently across workflows. Comparison was structured around three user-visible, first-class elements:

- Targets: machines being evaluated.

- References: prepared machines or assets representing the expected state.

- Rules: checks that define what is evaluated and how differences are interpreted.

By naming and separating these concepts, I turned comparison into a workflow users could understand, configure, and explain rather than a hidden internal mechanism. Secure upcycling then became one clear use case of the model rather than a special-case feature.
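To make the three elements concrete, here is a minimal Python sketch of how targets, references, and rules might fit together, and how an evaluation could return per-rule expected and observed values instead of a single pass/fail. It is not the product's implementation: the class names, the `evaluate` function, the flat attribute-to-value model of machine state, the three statuses (`match`, `difference`, `limited`), and the example attribute names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical sketch: a machine's state is modeled as a flat mapping of
# attribute name -> value. The real product's data model is not described.
State = dict[str, str]


@dataclass
class Target:
    """A machine being evaluated."""
    name: str
    observed: State


@dataclass
class Reference:
    """A prepared machine or asset representing the expected state."""
    name: str
    expected: State


@dataclass
class Rule:
    """A check: which attribute to evaluate and how to interpret a difference."""
    attribute: str
    # By default a difference is any inequality; a rule could relax this.
    matches: Callable[[str, str], bool] = lambda expected, observed: expected == observed
    enabled: bool = True


@dataclass
class Result:
    rule: str
    expected: Optional[str]
    observed: Optional[str]
    status: str  # "match", "difference", or "limited"


def evaluate(target: Target, reference: Reference, rules: list[Rule]) -> list[Result]:
    """Apply each enabled rule and record expected vs. observed per rule."""
    results: list[Result] = []
    for rule in rules:
        if not rule.enabled:
            continue  # disabled rules are skipped, not reported
        expected = reference.expected.get(rule.attribute)
        observed = target.observed.get(rule.attribute)
        if expected is None or observed is None:
            # Evaluation is limited: one side has no data for this attribute.
            status = "limited"
        elif rule.matches(expected, observed):
            status = "match"
        else:
            status = "difference"
        results.append(Result(rule.attribute, expected, observed, status))
    return results


if __name__ == "__main__":
    # Hypothetical attribute names, for illustration only.
    reference = Reference("clean-baseline", {"bios_password": "cleared", "secure_boot": "on"})
    target = Target("laptop-042", {"bios_password": "set", "secure_boot": "on"})
    rules = [Rule("bios_password"), Rule("secure_boot"), Rule("tpm_owner")]
    for r in evaluate(target, reference, rules):
        print(f"{r.rule}: expected={r.expected}, observed={r.observed} -> {r.status}")
```

Running it prints one line per enabled rule. The `tpm_owner` rule comes back `limited` because neither machine reports that attribute, which is the kind of evaluation boundary the output was meant to expose rather than fold into a pass/fail.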

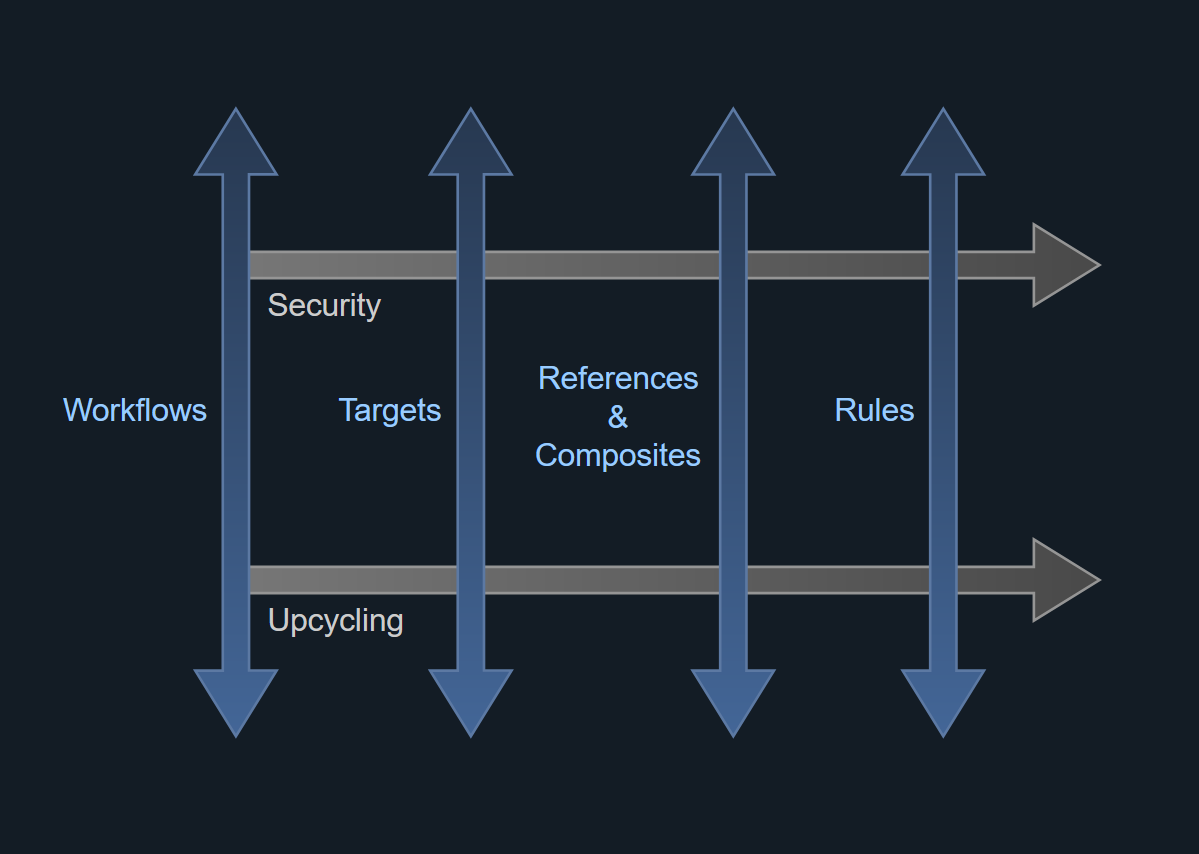

Designing for Scale

Once the comparison model was explicit, the comparison engine could support multiple use cases, including security validation and secure upcycling, through the same underlying structure.

Each dimension could vary independently:

- Workflows serving different underlying needs, which in turn shaped typical choices in the other dimensions

- Targets selected via filters, attributes, groups, or manual selection

- References sourced from prepared machines, uploaded assets, or vendor defaults

- Reference composites created by merging multiple machines into a single expected state

- Rules grouped by use case and enabled or disabled individually

This structure made it possible to introduce new workflows without revisiting the underlying system. What previously required scripts and manual explanation could now be expressed through configuration, making the capability easier to adopt, demonstrate, and extend.
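Reference composites are the least self-explanatory dimension above. One plausible merge policy is sketched below in Python purely as an assumption, since the source does not say how machines were merged: an attribute is kept in the composite only when every source machine reports the same value, and everything else is returned as unresolved. The function name `merge_composite` and the flat attribute-to-value model are hypothetical.

```python
from collections.abc import Mapping, Sequence


def merge_composite(sources: Sequence[Mapping[str, str]]) -> tuple[dict[str, str], set[str]]:
    """Merge several machine states into one expected state.

    Hypothetical policy (an assumption, not the product's behavior): keep an
    attribute only if every source reports it with the same value; return
    attributes that disagree or are missing somewhere as unresolved.
    """
    if not sources:
        return {}, set()
    all_keys = set().union(*(s.keys() for s in sources))
    composite: dict[str, str] = {}
    unresolved: set[str] = set()
    for key in sorted(all_keys):
        values = {s.get(key) for s in sources}  # None marks a missing attribute
        if len(values) == 1 and None not in values:
            composite[key] = values.pop()
        else:
            unresolved.add(key)
    return composite, unresolved
```

In this sketch, values that legitimately differ per machine, such as a hostname, end up unresolved instead of silently taking one source's value; surfacing them lets an administrator decide whether a rule should exclude them.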

Configuration and Evaluation Output

The presentation layer in the UI was designed to mirror the comparison model directly.

Configuration followed a step-based flow that made each comparison decision explicit: selecting targets, choosing references, and defining rules. This structure allowed administrators to reason clearly about how comparisons were defined and to configure them with confidence.

The evaluation output followed the same structure. Instead of a single pass or fail indicator, users could drill down to see which elements were evaluated, the expected versus observed values, and where evaluation was limited. Because the output mirrored the structure of configuration, non-admin users could understand and act on it without additional explanation, even if they had not been involved in setup.

This shared structure reduced reliance on external resources and made the capability usable across different roles, from administrators defining comparisons to operators reviewing outcomes.

Outcome

The approach was reviewed across product, engineering, sales, and executive leadership before being shared with the customer. The secure upcycling workflow was approved as a product feature because it addressed the immediate request while strengthening a core capability and aligning with future goals.

Because I chose to invest in the comparison model rather than ship a narrow solution, the feature became easier to explain, credible as an upsell, and applicable beyond a single customer. The workflow also reduced reliance on engineering and support for explanation, troubleshooting, and demonstration. A request that could have resulted in a one-off implementation instead moved the platform forward in a reusable way.